A Comprehensive Introduction to Salt

Introduction

Recent advances in IT infrastructure, particularly for cloud-based services where scalability, elasticity and availability are key factors, have been accompanied by advances in how these critical infrastructures are managed and administered, be it in log analysis and systems monitoring or in configuration management and automation. This is a rapidly evolving field, as new tools with different approaches have emerged over the last few years. [2]

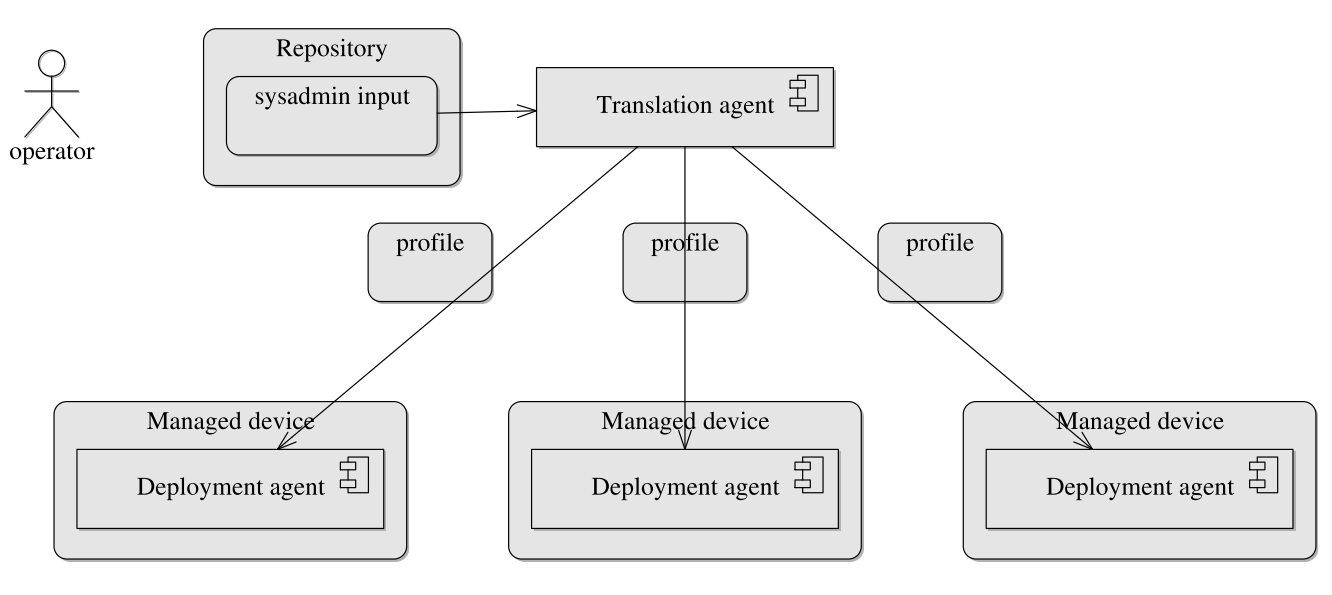

Delaet et al. [3] provide a framework for evaluating different system configuration tools. They also define a conceptual architecture for these tools, illustrated in Figure 1. In essence, this type of tool is composed of a master element (typically residing on a deployment node) containing a set of specialized modules that translate, through some form of manifest files, the specific configuration for each component of the remote managed nodes of the infrastructure. Each remote managed node, through some form of local agent, conveys detailed information about itself to the master and executes the configuration actions determined by the master. The master compiles, in a repository, a catalog (inventory) with information on the nodes and on how they should be configured.

In their paper, Benson et al. [1] address the challenge of building an effective multi-cloud application deployment controller as a customer add-on outside of a cloud utility service, using automation tools such as Ansible (ansible.com), SaltStack (saltstack.com) and Chef (www.chef.io). They compare these tools with each other, as well as with the case where there is no automation framework, just plain shell code.

The authors compare those three tools in terms of performance in executing three sets of tasks (installing a chemistry software package, installing an analytics software package, and installing both software packages), the amount of code needed to execute those tasks, and the features they have or lack, such as a GUI and the need for an agent.

Ebert et al. [4] discuss the recent organizational culture known as DevOps, namely the toolset used at its different phases, from build, to deployment, to operations. The authors conclude that "DevOps is impacting the entire software and IT industry. Building on lean and agile practices, DevOps means end-to-end automation in software development and delivery."[4], with which I agree. The authors also state that "Hardly anybody will be able to approach it with a cookbook-style approach, but most developers will benefit from better connecting development and operations."[4], with which I do not entirely agree. As I will try to demonstrate, a reactive infrastructure can be created to act autonomously on certain events without abandoning the cookbook-like approach, through a set of triggered events that can be used to automate software development and delivery. There are already tools, such as SaltStack, that make it possible for certain events to intelligently trigger actions, thereby allowing IT operators to create autonomic systems and data centers instead of just static runbooks.

The authors also state that a "mutual understanding from requirements onward to maintenance, service, and product evolution will improve cycle time by 10 to 30 percent and reduce costs up to 20 percent."[4], pointing out that the major drivers are fewer requirements changes, focused testing, quality assurance, and a much faster delivery cycle with feature-driven teams. They also point out how dynamic this DevOps culture is, as "each company needs its own approach to achieve DevOps, from architecture to tools to culture."[4]

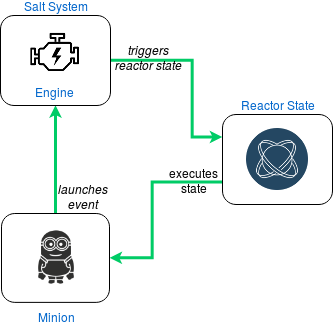

SaltStack took a different approach for its architecture, illustrated in Figure 2, when compared to the architecture defined by Delaet et al. [3]. Comparing both, there are some similarities, but there is also a bidirectional channel of communication between the managed devices and the Salt Master, which corresponds to an Event Bus that connects the different parts of the tool. The Runner is used for executing modules specific to the Salt Master, but it can also provide information about the managed devices. The Reactor is responsible for receiving events and matching them to the appropriate state, or even for firing another event as a consequence of the former. As stated in the documentation, "Salt Engines are long-running, external system processes that leverage Salt" (https://docs.saltstack.com), which allows external tools to be integrated into the Salt system, for example to forward the messages of a channel on the Slack (slack.com) communication tool to the Salt Event Bus (see https://github.com/saltstack/salt/blob/develop/salt/engines/slack.py).

Architecture

For this work we will be using SaltStack's orchestration tool, Salt. The requirements for a device to be managed by it can be as little as exposing a REpresentational State Transfer (REST) API through which one can interact with the device. Its overall architecture can be seen in the accompanying figure. In this section we will see the architectural, network and hardware requirements, as well as Salt's software requirements and how to use Salt as a full infrastructure management tool.

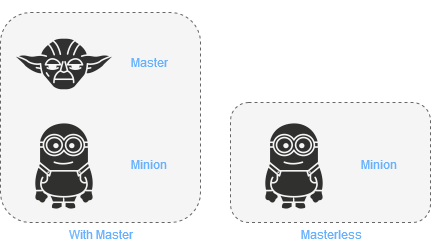

The minimum configuration for Salt is one machine, which takes the role of either both Master and Minion, or just a Minion with no Master (see the accompanying figure). Although this does not achieve much, it still allows operations to be tested, be it configuration management, reactions to certain events or the monitoring of processes, or simply to experiment with Salt.

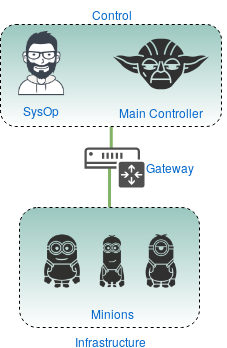

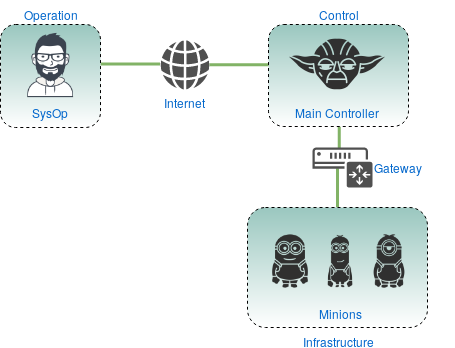

Consider the two scenarios represented in Figure 4 and Figure 5:

- an Operator within the Control layer

- an Operator outside the Control layer, with Control and Infrastructure layers behind a Network Address Translation (NAT)

In the first scenario, the Operator is either on the Main Controller itself or in its network neighbourhood, meaning that the Operator has network access within the private network of which the Main Controller is part.

In the second scenario, the Operator and the Main Controller are in distinct networks, meaning that the Operator will need external access to the infrastructure, either by using the Main Controller as a bastion server or by assigning a public Internet Protocol (IP) address to each minion. As public IP addresses are not that abundant, using a bastion becomes a necessity.

The Salt administration tool, by SaltStack, has several key components which make it a complete framework for managing and administering an IT infrastructure. In each of the following subsections we will see what they are used for and how to make use of them.

"Running pre-defined or arbitrary commands on remote hosts, also known as remote execution, is the core function of Salt" [5]. Remote execution in Salt is achieved through execution modules and returners. Salt also contains a configuration management framework, which complements the remote execution functions by allowing more complex and interdependent operations. The framework functions are executed on the minion's side, allowing for scalable, simultaneous configuration of a great number of minion targets.

"The Salt Event System is used to fire off events enabling third party applications or external processes to react to behavior within Salt" [6]. These events can be launched from within the Salt infrastructure or from applications residing outside of it. To monitor non-Salt processes one can use the beacon system which "allows the minion to hook into a variety of system processes and continually monitor these processes. When monitored activity occurs in a system process, an event is sent on the Salt event bus that can be used to trigger a reactor" [7].

The two previous components culminate in using that information to trigger actions in response to those kinds of events. For this, Salt has the reactor system, "a simple interface to watching Salt's event bus for event tags that match a given pattern and then running one or more commands in response." [8].

Much of the information about a minion is static, yet specific to that individual minion. This information can either be gathered from the minion itself or assigned to it by the Salt system. The first "is called the grains interface, because it presents salt with grains of information. Grains are collected for the operating system, domain name, IP address, kernel, OS type, memory, and many other system properties." [9]. The latter is called the pillar, which "is an interface for Salt designed to offer global values that can be distributed to minions" [10], privately with respect to every other minion, if need be.

Directory Structures

There are two essential locations for salt related files (excluding service files):

- /etc/salt

- /srv/salt

In /etc/salt we have the configuration files for salt master and minion, as well as the keys for known minions (if it is a salt master machine) and the key for the salt master (if it is a salt minion machine).

    root@master:~# tree /etc/salt
    /etc/salt
    ├── master
    ├── minion
    ├── minion_id
    └── pki
        ├── master
        │   ├── master.pem
        │   ├── master.pub
        │   ├── minions
        │   │   ├── minion1
        │   │   ├── minion2
        │   │   └── minion3
        │   ├── minions_autosign
        │   ├── minions_denied
        │   ├── minions_pre
        │   └── minions_rejected
        └── minion
            ├── minion_master.pub
            ├── minion.pem
            └── minion.pub

/etc/salt/master: master config file

/etc/salt/minion: minion config file

/etc/salt/minion_id: minion identifier file

/etc/salt/pki/master/master.pem: master private key

/etc/salt/pki/master/master.pub: master public key

/etc/salt/pki/minion/minion_master.pub: master public key

/etc/salt/pki/minion/minion.pub: minion public key

/etc/salt/pki/minion/minion.pem: minion private key

In /srv/salt we store the state, pillar and reactor files; we will read more about these further ahead.

Configuration Files

We will now see basic, and some optional, settings for both salt master and salt minion.

/etc/salt/master

| |

From reading this configuration file we can see that the master will listen on all interfaces using the default publish port, run as user root, keep job information for a week, use a timeout for salt commands that is higher than the default, and log to the system's logger with a custom-defined format:

[TIMESTAMP] salt-master[PID] SALT_FUNCTION: MESSAGE

Besides this we also set up the base directories for state files, pillar data and reactor states. We will read about these further on in this post, in Configuration Management, Storing and Accessing Data and Event System, respectively.
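As a rough illustration of the settings just described, a master configuration along the following lines would produce that behaviour. This is a sketch under assumptions, not the exact file used in this post: the timeout value and the log format string are made up for the example (the timestamp is assumed to be added by the system logger), while the option names are standard Salt master options.

```yaml
# /etc/salt/master (illustrative sketch; concrete values are assumptions)
interface: 0.0.0.0          # listen on all interfaces
publish_port: 4505          # Salt's default publish port
user: root                  # user the salt-master daemon runs as
keep_jobs: 168              # hours of job information to keep (one week)
timeout: 10                 # seconds to wait for minion returns; the default is 5

# Log to the local syslog socket with a custom line format.
log_file: file:///dev/log
log_fmt_logfile: 'salt-master[%(process)d] %(name)s: %(message)s'

# Base directories for state files and pillar data.
file_roots:
  base:
    - /srv/salt/states/base
pillar_roots:
  base:
    - /srv/salt/pillar/base
```

The `base` key under `file_roots` and `pillar_roots` names the environment, which is the same environment name used later in the top files.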

/etc/salt/minion

| |

As we can see, the most basic setting in a salt minion's configuration file is the network location of its salt master, which can be given as a FQDN, a hostname defined in the /etc/hosts file, or an IP address.

We can also see the minion_id setting which forces a minion identifier, instead of letting the salt-minion choose one based on the machine's hostname.
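A minimal minion configuration matching this description might look like the sketch below. The hostname and identifier are assumptions chosen to match the names used elsewhere in this post. Note that in Salt's minion configuration the identifier is set with the `id` option, while /etc/salt/minion_id is the file where the identifier is cached.

```yaml
# /etc/salt/minion (illustrative sketch; values are assumptions)
master: master.local   # salt master location: FQDN, /etc/hosts name or IP address
id: minion1            # explicit minion identifier, instead of one derived from the hostname
```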

Subsystem Files

As we saw before, all of the salt subsystem files are kept within /srv/salt/. Here is a tree view of its subdirectories (not ordered, on purpose):

    root@master:~# tree /srv/salt
    /srv/salt
    ├── states
    │   └── base
    │       ├── ntp
    │       │   ├── etc
    │       │   │   └── ntp.conf.tmpl
    │       │   └── init.sls
    │       └── top.sls
    ├── pillar
    │   └── base
    │       ├── ntp
    │       │   └── init.sls
    │       └── top.sls
    └── reactor
        └── auth-pending.sls

The Top File

An infrastructure can be seen as an application stack in itself, with groups of different machines set up in small clusters, each cluster performing a sequence of tasks. The Top file is where configuration roles are assigned to Minions. This kind of file is used by both the State and Pillar systems. Structurally, Top files can be seen as having three levels:

| Level | Symbol | Description |
|---|---|---|
| Environment | ϵ | A directory structure containing a set of SaLt State (SLS) files. |
| Target | θ | A predicate used to target Minions. |
| State files | σ | A set of SLS files to apply to a target. Each file describes one or more states to be executed on matched Minions. |

These three levels relate in such a way that θ ∈ ϵ and σ ∈ θ. Restating these two relations, we have that:

- Environments contain targets

- Targets contain states

Putting these concepts together, we can describe a scenario (top file) in which the state defined in the SLS file ntp/init.sls is enforced on all minions.

top.sls:

| |
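A top file expressing that scenario can be sketched as follows. It assumes the `base` environment and the directory layout shown in Subsystem Files; the glob `'*'` is the target that matches every minion.

```yaml
# /srv/salt/states/base/top.sls (illustrative sketch)
base:        # environment
  '*':       # target: a glob matching all minions
    - ntp    # state: resolves to ntp/init.sls inside the environment
```

Reading it from the outside in reproduces the three levels: the environment contains the target, and the target contains the list of states.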

Remote Execution

Before delving into the more intricate, orchestration-like functionality of salt, let us look at what is probably its most basic operation, and one you will likely use a lot.

The function is cmd.run, made available by the cmd execution module (see [11] for a list of all available execution modules). We can use it like this, from a salt master environment:

root@master:~# salt \* cmd.run 'hostname && pwd && ls -la'

This will run the command, or sequence of commands, on every targeted minion and return its result. You can also specify the shell you want to use with the shell argument.

root@master:~# salt \* cmd.run 'hostname && pwd && ls -la' shell=/bin/bash

minion3:

minion3.local

/root

total 40

drwx------ 7 root root 4096 Sep 27 16:35 .

drwxr-xr-x 23 root root 4096 Oct 2 07:00 ..

-rw------- 1 root root 2336 Sep 29 14:11 .bash_history

-rw-r--r-- 1 root root 3201 Sep 3 14:19 .bashrc

drwx------ 3 root root 4096 Sep 19 15:21 .cache

drwx------ 3 root root 4096 Sep 27 16:35 .config

drwxr-xr-x 2 root root 4096 Aug 17 17:00 .nano

-rw-r--r-- 1 root root 148 Aug 17 2015 .profile

drwx------ 2 root root 4096 Sep 19 15:21 .ssh

drwxr-xr-x 3 root root 4096 Sep 6 16:43 .virtualenvs

minion1:

master.local

/root

total 40

drwx------ 7 root root 4096 Sep 27 16:35 .

drwxr-xr-x 23 root root 4096 Oct 2 07:00 ..

-rw------- 1 root root 2336 Sep 29 14:11 .bash_history

-rw-r--r-- 1 root root 3201 Sep 3 14:19 .bashrc

drwx------ 3 root root 4096 Sep 19 15:21 .cache

drwx------ 3 root root 4096 Sep 27 16:35 .config

drwxr-xr-x 2 root root 4096 Aug 17 17:00 .nano

-rw-r--r-- 1 root root 148 Aug 17 2015 .profile

drwx------ 2 root root 4096 Sep 19 15:21 .ssh

drwxr-xr-x 3 root root 4096 Sep 6 16:43 .virtualenvs

minion2:

minion2.local

/root

total 40

drwx------ 7 root root 4096 Sep 27 16:35 .

drwxr-xr-x 23 root root 4096 Oct 2 07:00 ..

-rw------- 1 root root 2336 Sep 29 14:11 .bash_history

-rw-r--r-- 1 root root 3201 Sep 3 14:19 .bashrc

drwx------ 3 root root 4096 Sep 19 15:21 .cache

drwx------ 3 root root 4096 Sep 27 16:35 .config

drwxr-xr-x 2 root root 4096 Aug 17 17:00 .nano

-rw-r--r-- 1 root root 148 Aug 17 2015 .profile

drwx------ 2 root root 4096 Sep 19 15:21 .ssh

drwxr-xr-x 3 root root 4096 Sep 6 16:43 .virtualenvs

root@master:~#

Another widely used execution module is pkg. It tries to identify the package system present on the minion and uses it to perform the requested operations.

root@master:~# salt minion\* pkg.latest_version postfix

minion1:

3.1.0-3ubuntu0.3

minion2:

3.1.0-3ubuntu0.3

minion3:

3.1.0-3ubuntu0.3

root@master:~# salt minion\* pkg.install postfix

minion1:

----------

postfix:

----------

new:

3.1.0-3ubuntu0.3

old:

(... suppressed output ...)

minion3:

----------

postfix:

----------

new:

3.1.0-3ubuntu0.3

old:

(... suppressed output ...)

minion2:

----------

postfix:

----------

new:

3.1.0-3ubuntu0.3

old:

(... suppressed output ...)

Configuration Management

Remote execution is very useful, but we may want to handle more complex scenarios. For those we can make use of SLS files, states, and state modules [12].

From the tree view seen in Subsystem Files and the configuration of the file_roots option, all of our available states are to be placed in /srv/salt/states/base.

SLS files are mainly written in YAML Ain't Markup Language (YAML) [13] and, like most salt related files, can be templated using Jinja [14], which is the default templating engine.

ntp/init.sls:

| |

ntp/etc/ntp.conf.tmpl:

| |

This salt state file, when applied, will:

- install the ntp package, using the system's package manager

- create/change the file /etc/ntp.conf on the minion, according to the contents of /srv/salt/states/base/ntp/etc/ntp.conf.tmpl on the master

- make sure the ntp service is stopped if there are changes to the previously managed file

- make sure the ntp service is running and enabled, restarting it if there were changes to the managed file (it should not need to restart, because of the ntp-shutdown state)
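The behaviour listed above could be expressed with an SLS file along these lines. This is a sketch under assumptions rather than the file used in this post: only the IDs `ntp-pkg` and `ntp-shutdown` come from the text, the other IDs are hypothetical, and the requisites chosen (`require`, `onchanges`, `watch`) are one possible way of obtaining the described ordering.

```yaml
# /srv/salt/states/base/ntp/init.sls (illustrative sketch)
ntp-pkg:
  pkg.installed:
    - name: ntp

# Hypothetical ID: render the template from the master onto the minion.
ntp-conf:
  file.managed:
    - name: /etc/ntp.conf
    - source: salt://ntp/etc/ntp.conf.tmpl
    - template: jinja
    - require:
      - pkg: ntp-pkg

# Stop the service, but only when the managed file changed.
ntp-shutdown:
  service.dead:
    - name: ntp
    - onchanges:
      - file: ntp-conf

# Hypothetical ID: make sure the service ends up running and enabled.
ntp-service:
  service.running:
    - name: ntp
    - enable: True
    - require:
      - service: ntp-shutdown
    - watch:
      - file: ntp-conf
```

The `salt://` prefix points into the state tree configured under file_roots, so `salt://ntp/etc/ntp.conf.tmpl` corresponds to the template path given in the list above.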

To apply a state we use the state execution module and its function apply. One key difference between using execution modules and state modules is the possibility of using the keyword test which, when True, will not actually apply the state but will instead show what it would do and, in our case, which changes it would apply.

root@master:~# salt --state-verbose=True minion2 state.apply ntp test=True

minion2:

----------

ID: ntp-pkg

Function: pkg.installed

Name: ntp

Result: True

Comment: All specified packages are already installed

Started: 15:26:10.712651

Duration: 928.968 ms

Changes:

----------

ID: ntp-pkg

Function: file.managed

Name: /etc/ntp.conf

Result: None

Comment: The file /etc/ntp.conf is set to be changed

Started: 15:26:11.664815

Duration: 63.205 ms

Changes:

----------

diff:

---

+++

@@ -1,53 +1,20 @@

-# /etc/ntp.conf, configuration for ntpd; see ntp.conf(5) for help

+# This file managed by Salt, do not edit

+#

+#

+# With the default settings below, ntpd will only synchronize your clock.

-driftfile /var/lib/ntp/ntp.drift

-

-

-# Enable this if you want statistics to be logged.

-#statsdir /var/log/ntpstats/

-

-statistics loopstats peerstats clockstats

-filegen loopstats file loopstats type day enable

-filegen peerstats file peerstats type day enable

-filegen clockstats file clockstats type day enable

-

-# Specify one or more NTP servers.

# Use servers from the NTP Pool Project. Approved by Ubuntu Technical Board

# on 2011-02-08 (LP: #104525). See http://www.pool.ntp.org/join.html for

# more information.

-server ntp1.somewhere.in.time

-server ntp2.somewhere.in.time

+server ntp1.example.com

+server ntp2.example.com

-# Use Ubuntu's ntp server as a fallback.

-server ntp.ubuntu.com

-# Access control configuration; see /usr/share/doc/ntp-doc/html/accopt.html for

-# details. The web page <http://support.ntp.org/bin/view/Support/AccessRestrictions>

-# might also be helpful.

-#

-# Note that "restrict" applies to both servers and clients, so a configuration

-# that might be intended to block requests from certain clients could also end

-# up blocking replies from your own upstream servers.

-

-# By default, exchange time with everybody, but don't allow configuration.

-restrict -4 default kod notrap nomodify nopeer noquery

-restrict -6 default kod notrap nomodify nopeer noquery

-

-# Local users may interrogate the ntp server more closely.

+# Only allow read-only access from localhost

+restrict default noquery nopeer

restrict 127.0.0.1

restrict ::1

-# Clients from this (example!) subnet have unlimited access, but only if

-# cryptographically authenticated.

-#restrict 192.168.123.0 mask 255.255.255.0 notrust

-

-

-# If you want to provide time to your local subnet, change the next line.

-# (Again, the address is an example only.)

-#broadcast 192.168.123.255

-

-# If you want to listen to time broadcasts on your local subnet, de-comment the

-# next lines. Please do this only if you trust everybody on the network!

-#disable auth

-#broadcastclient

+# Location of drift file

+driftfile /var/lib/ntp/ntp.drift

----------

ID: ntp-pkg

Function: service.running

Name: ntp

Result: None

Comment: Service ntp is set to start

Started: 15:26:11.729479

Duration: 134.939 ms

Changes:

----------

ID: ntpdate

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 15:26:11.864881

Duration: 25.952 ms

Changes:

Summary for minion2

------------

Succeeded: 4 (unchanged=2, changed=1)

Failed: 0

------------

Total states run: 4

Total run time: 1.153 s

Now for the actual application of the state:

----------

ID: ntp-pkg

Function: pkg.installed

Name: ntp

Result: True

Comment: All specified packages are already installed

Started: 15:30:11.488468

Duration: 902.224 ms

Changes:

----------

ID: ntp-pkg

Function: file.managed

Name: /etc/ntp.conf

Result: True

Comment: File /etc/ntp.conf updated

Started: 15:30:12.394500

Duration: 94.999 ms

Changes:

----------

diff:

---

+++

@@ -1,53 +1,20 @@

-# /etc/ntp.conf, configuration for ntpd; see ntp.conf(5) for help

+# This file managed by Salt, do not edit

+#

+#

+# With the default settings below, ntpd will only synchronize your clock.

-driftfile /var/lib/ntp/ntp.drift

-

-

-# Enable this if you want statistics to be logged.

-#statsdir /var/log/ntpstats/

-

-statistics loopstats peerstats clockstats

-filegen loopstats file loopstats type day enable

-filegen peerstats file peerstats type day enable

-filegen clockstats file clockstats type day enable

-

-# Specify one or more NTP servers.

# Use servers from the NTP Pool Project. Approved by Ubuntu Technical Board

# on 2011-02-08 (LP: #104525). See http://www.pool.ntp.org/join.html for

# more information.

-server ntp1.somewhere.in.time

-server ntp2.somewhere.in.time

+server ntp1.example.com

+server ntp2.example.com

-# Use Ubuntu's ntp server as a fallback.

-server ntp.ubuntu.com

-# Access control configuration; see /usr/share/doc/ntp-doc/html/accopt.html for

-# details. The web page <http://support.ntp.org/bin/view/Support/AccessRestrictions>

-# might also be helpful.

-#

-# Note that "restrict" applies to both servers and clients, so a configuration

-# that might be intended to block requests from certain clients could also end

-# up blocking replies from your own upstream servers.

-

-# By default, exchange time with everybody, but don't allow configuration.

-restrict -4 default kod notrap nomodify nopeer noquery

-restrict -6 default kod notrap nomodify nopeer noquery

-

-# Local users may interrogate the ntp server more closely.

+# Only allow read-only access from localhost

+restrict default noquery nopeer

restrict 127.0.0.1

restrict ::1

-# Clients from this (example!) subnet have unlimited access, but only if

-# cryptographically authenticated.

-#restrict 192.168.123.0 mask 255.255.255.0 notrust

-

-

-# If you want to provide time to your local subnet, change the next line.

-# (Again, the address is an example only.)

-#broadcast 192.168.123.255

-

-# If you want to listen to time broadcasts on your local subnet, de-comment the

-# next lines. Please do this only if you trust everybody on the network!

-#disable auth

-#broadcastclient

+# Location of drift file

+driftfile /var/lib/ntp/ntp.drift

----------

ID: ntp-pkg

Function: service.running

Name: ntp

Result: True

Started: 15:30:12.587597

Duration: 49.742 ms

Changes:

----------

ID: ntpdate

Function: pkg.installed

Result: True

Comment: All specified packages are already installed

Started: 15:30:12.637827

Duration: 29.567 ms

Changes:

Summary for minion2

------------

Succeeded: 4 (changed=1)

Failed: 0

------------

Total states run: 4

Total run time: 1.077 s

Now, if we apply the ntp state again, we will see no changes and no service stop/start.

SLS files can include other SLS files, use externally generated data and depend on states declared on included SLS files.

Each state can require the completion of another state, be required by other states, watch a certain state, execute unless a given command returns True, execute only if a certain command returns True, and more. [2] [15]
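To make these options more concrete, here is a small sketch of an SLS file that includes another one and combines several requisites. The file name, the state ID `ntp-peers` and the marker file are hypothetical; `ntp-pkg` is the package state ID that appears in the output shown earlier.

```yaml
# /srv/salt/states/base/ntp/check.sls (hypothetical file, illustrative sketch)
include:
  - ntp                       # pull in the states declared in ntp/init.sls

ntp-peers:
  cmd.run:
    - name: ntpq -p           # list the peers the local ntp daemon knows about
    - onlyif: which ntpq      # run only if this command succeeds
    - unless: test -f /etc/ntp.skip-check   # skip if this command succeeds
    - require:
      - pkg: ntp-pkg          # depends on a state declared in the included file
```

Because of the `include`, the `require` can refer to a state that is not declared in this file.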

Storing and Accessing Data

When planning and implementing this type of orchestration, it is useful to have information that can be shared between states, and which is known only to the intended recipients (i.e: specific minions).

In salt we have the Pillar. Its configuration is similar to the one used by States and, as we saw in Subsystem Files, its files are located in /srv/salt/pillar/base.

The way the information is defined and assigned is similar to states, but instead of defining State Module executions we define information in the form of YAML, as shown:

/srv/salt/pillar/base/ntp/init.sls:

| |

We then define the assignment of data in the corresponding pillar top file, where all minions are assigned information regarding the Network Time Protocol (NTP) server domain names and their NTP service configuration file.

/srv/salt/pillar/base/top.sls:

| |
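The two pillar files just described might be sketched as follows. The key names (`ntp`, `servers`, `conf_file`) are assumptions made for this example; the server names and the configuration file path are the ones visible in the state output shown earlier.

```yaml
# /srv/salt/pillar/base/ntp/init.sls (illustrative sketch; key names are assumptions)
ntp:
  servers:
    - ntp1.example.com
    - ntp2.example.com
  conf_file: /etc/ntp.conf
---
# /srv/salt/pillar/base/top.sls (illustrative sketch)
base:
  '*':       # every minion receives the ntp pillar data
    - ntp
```

The `---` separator is only there to show both files in a single listing; on disk they are two separate files.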

This information can be used within states or even template files, using the pillar keyword.

Taking the previous SLS file and its managed template, we can now dynamically fill NTP related info.

ntp/init.sls:

| |

ntp/etc/ntp.conf.tmpl:

| |
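As a sketch of what the pillar-driven state could look like, the excerpt below reads the configuration file path from pillar instead of hard-coding it. The pillar keys `ntp:conf_file` and `ntp:servers` and the state ID `ntp-conf` are assumptions made for this example, not names confirmed by the original listings.

```yaml
# /srv/salt/states/base/ntp/init.sls (excerpt, illustrative sketch)
ntp-conf:
  file.managed:
    - name: {{ pillar['ntp']['conf_file'] }}   # path taken from pillar data
    - source: salt://ntp/etc/ntp.conf.tmpl
    - template: jinja                          # render the source file through Jinja
```

Inside ntp.conf.tmpl the same `pillar` lookups are available, so a Jinja loop over `pillar['ntp']['servers']` can emit one `server` line per entry.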

This example could be enhanced further. Let us try to make it able to configure NTP servers on a Windows machine. For that we need to use another data source in the salt system, the grains, which are generated by the minion itself.

root@master:~# salt win\* grains.get os

windows1:

Windows

root@master:~#

Interesting, so now we can have selective behaviour in our state:

ntp/etc/ntp.conf.tmpl:

| |
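One way to obtain this kind of selective behaviour is to branch on the `os` grain with Jinja. The sketch below places the branch in the SLS file, which is an assumption, as are the pillar key `ntp:servers` and the ID `ntp-servers`. It relies on Salt's `ntp.managed` state, which is assumed here to be the appropriate way to set NTP servers on Windows minions.

```yaml
# /srv/salt/states/base/ntp/init.sls (excerpt, illustrative sketch)
{% if grains['os'] == 'Windows' %}
# Windows minions: set the NTP servers directly, no package or config file.
ntp-servers:
  ntp.managed:
    - servers:
{%- for server in pillar['ntp']['servers'] %}
      - {{ server }}
{%- endfor %}
{% else %}
# Other minions: install the ntp package as before.
ntp-pkg:
  pkg.installed:
    - name: ntp
{% endif %}
```

The grain value compared against is the one returned by `grains.get os` in the command output above.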

And now if we apply the state to the windows machine:

root@master:~# salt win\* state.apply ntp test=True

Event System

In the Introduction we saw, in Figure 2, a general view of the Salt system, which has a Reactor element. This element is part of a broader system, the Event System (represented in Figure 6), which is "used to fire off events enabling third party applications or external processes to react to behavior within Salt" [6].

The Event System has two main components:

| Component | Description |
|---|---|
| Event Socket | from where events are published |
| Event Library | used for listening to events and forwarding them to the salt system |

"The event system is a local ZeroMQ PUB interface which fires salt events. This event bus is an open system used for sending information notifying Salt and other systems about operations" [8]. The reactor system associates SLS files with event tags on the master, and those SLS files in turn represent reactions.

This means that the reactor system needs two steps to work properly. First, the reactor key needs to be configured in the master configuration file; this associates event tags with SLS reaction files. Second, these reaction files use a YAML data structure similar to that of the state system to define which reactions are to be executed.

/etc/salt/master:

| |

This entry means that for every 'salt/auth' event, the reactor state /srv/salt/reactor/auth-pending.sls should be executed. Let us see what that reactor state actually does. [8]
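A reactor entry of the kind just described can be sketched like this, using the event tag and file path named in the text. The list may contain several tags, and each tag may map to several reaction files.

```yaml
# /etc/salt/master (reactor section, illustrative sketch)
reactor:
  - 'salt/auth':                               # event tag to match
    - /srv/salt/reactor/auth-pending.sls       # reaction file(s) to render and run
```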

auth-pending.sls:

| |
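A reactor file matching the description quoted below can be sketched as follows, modelled on the example in the Salt reactor documentation [8]. The master hostname `salt-master.domain.tld` is a placeholder, the minion restart command depends on the minion's init system, and the `ink` prefix is simply the one mentioned in the quotation.

```yaml
# /srv/salt/reactor/auth-pending.sls (illustrative sketch)
{# Key was rejected: delete it on the master and restart the minion over ssh #}
{% if not data['result'] and data['id'].startswith('ink') %}
minion_remove:
  wheel.key.delete:
    - args:
      - match: {{ data['id'] }}
minion_rejoin:
  local.cmd.run:
    - tgt: salt-master.domain.tld
    - args:
      - cmd: ssh -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no "{{ data['id'] }}" 'sleep 10 && /etc/init.d/salt-minion restart'
{% endif %}

{# Key is pending and the id starts with ink: accept it #}
{% if 'act' in data and data['act'] == 'pend' and data['id'].startswith('ink') %}
minion_add:
  wheel.key.accept:
    - args:
      - match: {{ data['id'] }}
{% endif %}
```

The `data` variable holds the payload of the event that triggered the reaction, which is how the file can inspect the minion id and the state of its key.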

"In this SLS file, we say that if the key was rejected we will delete the key on the master and then also tell the master to ssh in to the minion and tell it to restart the minion, since a minion process will die if the key is rejected. We also say that if the key is pending and the id starts with ink we will accept the key. A minion that is waiting on a pending key will retry authentication every ten seconds by default." [8]

Conclusion

In this post we saw where some of the methods used by system configuration tools come from, and the similarities between then and now. We briefly explored the configuration management and orchestration tool Salt: we saw how to execute commands remotely via the cmd.run execution module function, how to orchestrate more complex scenarios with the state.apply function, how to generate, share and fetch static and dynamic information via the grains and pillar subsystems, and how to catch certain events and react to them via the Event System. I hope this was useful, and that you now have some grasp of what Salt is and how powerful it can be for planning and managing an IT infrastructure. Feel free to use the comment box below to ask any questions.

References

| [1] | J. O. Benson, J. J. Prevost, and P. Rad, Survey of automated software deployment for computational and engineering research, 10th Annual International Systems Conference, SysCon 2016 - Proceedings, 2016. |
| [2] | |
| [3] | Delaet et al. |
| [4] | C. Ebert et al. |
| [5] | --, Remote Execution - Saltstack Documentation - v2018.3.2, 2018. |
| [6] | --, Event System - Saltstack Documentation - v2018.3.2, 2018. |
| [7] | --, Beacons - Saltstack Documentation - v2018.3.2, 2018. |
| [8] | --, Reactor System - Saltstack Documentation - v2018.3.2, 2018. |
| [9] | --, Grains - Saltstack Documentation - v2018.3.2, 2018. |
| [10] | --, Pillar - Saltstack Documentation - v2018.3.2, 2018. |
| [11] | --, List of execution modules - Saltstack Documentation - v2018.3.2, 2018. |
| [12] | --, List of state modules - Saltstack Documentation - v2018.3.2, 2018. |
| [13] | --, YAML website. |
| [14] | --, Jinja2 website. |
| [15] | --, Requisites and Other Global State Arguments - Saltstack Documentation - v2018.3.2, 2018. |

Tags: linux python devops saltstack configuration management
